Chapter 2 Discrete Planning

Chapter 2 Discrete Planning

2.1 Introduction to Discrete Feasible Planning

2.1.1 Problem Formulation

Formulation 2.1

State:

State space: , nonempty, finite or infinite.

Action:

Action space: ,

State transition fuction: . Each current state x, when applied with each action u, produces a new state x'.

Initial state:

Goal state:

2.1.2 Examples of Discrete Planning

- Moving on a 2D gird

Suppose that a robot moves on a grid in which each grid point has integer coordinates of the form (i, j). The robot takes discrete steps in one of four directions (up, down, left, right), each of which increments or decrements one coordinate. The motions and corresponding state transition graph are shown in Figure 2.1, which can be imagined as stepping from tile to tile on an infinite tile floor. This will be expressed using Formulation 2.1. Let X be the set of all integer pairs of the form , in which (Z denotes the set of all integers). Let . Let . The state transition equation is , in which and are treated as two-dimensional vectors for the purpose of addition. For example, if x = (3, 4) and u = (0, 1), then f (x, u) = (3, 5). Suppose for convenience that the initial state is . Many interesting goal sets are possible. Suppose, for example, that . It is easy to find a sequence of actions that transforms the state from (0, 0) to (100, 100).

- Rubik's Cube Puzzle

2.2 Searching for Feasible Plans

The methods presented in this section are just graph search algorithms, but with the understanding that the state transition graph is revealed incrementally through the application of actions, instead of being fully specified in advance.

An important point is that the search algorithms must be systematic, that is, the algorithm must keep track of states already visited.

2.2.1 General Forward Search

The following figure gives a general template of search algorithms. At any point during the search, there will be three kinds of states:

Unvisited: States that have not been visited yet. Initially, this is every state except xI.

Dead: States that have been visited, and for which every possible next state has also been visited. A next state of is a state for which there exists a such that In a sense, these states are dead because there is nothing more that they can contribute to the search. In some circumstances, of course, dead state can become alive again.

Alive: States that have been encountered, but possibly have unvisited next states. These are considered alive. Initially, the only alive state is .

Forward Search

Q.insert(x_I) and mark x_I as visited.

while Q not empty do:

x = Q.GetFirst()

if x in x_G:

return SUCCESS

for all u in U(x):

x' = f(x,u)

if x' not visited:

Q.insert(x')

mark x' visited

else:

Resolve duplicate x'

Return FAILURE

The above is a general template of forward search algorithm. Two focuses are presented here: How efficient is the test to determine whether in line 4? How can one tell whether has been visited in line 8 and line 9?

2.2.2 Particular Forward Search Methods

This section presents several search algorithms, each of which constructs a search tree. Each search algorithm is a special case of the algorithm of the forward search algorithm template demonstrated before, obtained by defining a different sorting function for Q. Most of these are just classical graph search algorithms.

Breath First: Specify Q as a First-In First-Out (FIFO) queue. All plans that have k steps are exhausted before plans with k + 1 steps are investigated. Therefore, breadth first guarantees that the first solution found will use the smallest number of steps. The asymptotic running time of breadth-first search is .

Depth First: Specify Q as a First-In Last-Out (FILO) stack. The running time of depth first search is also .

Dijkstra’s algorithm: Use cost-to-come (distance between initial state and current state), short for Function C, to sort Q.

A*: Incorporate a heuristic estimate of the cost called cost-to-go (distance between current state and goal state), short for with .

Best first: Only use to sort Q.

Iterative deepening: An approach integrates Breath first and Depth first method. That means performs Depth first search at i depth (i=1, 2, 3....max depth). Initially, i is equal to 1 and will increase with step going on. For example, if i = 1, the algorithm cannot find . Then i will be 2, perform the same operation. If we still cannot find the solution, i will be 3 until we reach .

2.2.3 Other General Search Schemes

- Backward search: For many planning problems, it might be the case that the branching factor is large when starting from xI. In this case, it might be more efficient to start the search at a goal state and work backward until the initial state is encountered.

BACKWARD SEARCH

Q.insert(x_G) and mark x_G as visited.

while Q not empty do:

x = Q.GetFirst()

if x in xI:

return SUCCESS

for all u^-1 in U(x)^-1:

x' = f^-1(x,u^-1)

if x' not visited:

Q.insert(x')

mark x' visited

else:

Resolve duplicate x'

Return FAILURE

- Bidirectional search: One tree is grown from the initial state, and the other is grown from the goal state. The search terminates with success when the two trees meet. Failure occurs if either priority queue has been exhausted.

BIDIRECTIONAL SEARCH

Q_G.insert(X_G) and mark x_G as visited.

Q_I.insert(X_I) and mark x_I as visited.

while Q_G and Q_I not empty do:

x = Q_I.GetFirst()

if x already visited from x_G

return SUCCESS

for all u in U(x):

x' = f(x,u)

if x' not visited:

Q_I.insert(x')

mark x' visited

else:

Resolve duplicate x'

x = Q_G.GetFirst()

if x already visited from x_I

return SUCCESS

for all u^-1 in U(x)^-1:

x' = f^-1(x,u^-1)

if x' not visited:

x_G.insert(x')

mark x' visited

else:

Resolve duplicate x'

Return FAILURE

2.2.4 A Unified View of the Search Methods

For all search methods, there usually involves the following 6 steps:

Initialization: Initial graph G(V, E) and include some starting states in empty V, which could be or (Bidirectional search or backward search)

Select Vertex: Select states in priority queue Q sorted with some rules.

Apply an Action: Obtain a new state x' from f(x, u).

Insert a Directed Edge into the Graph: If certain algorithm-specific tests are passed, then generate an edge from x to x' for the forward case or an edge from xnew to x for the backward case. If x' is not yet in V , it will be inserted into V.

Check for Solution: Determine whether G encodes a path from to . If there is a single search tree, then this is trivial. If there are two or more search trees, then this step could be expensive.

Return to Step 2: Iterate unless a solution has been found or an early termination condition is satisfied, in which case the algorithm reports failure.

2.3 Discrete Optimal Planning

This section discusses optimal planning problems involving optimizing time, distance, energy consumed.

Formulation 2.2(Discrete Fixed-Length Optimal Planning)

All of the components from Formulation 2.1 will be inherited in this section like . Notably, here is finite.

The number of the stages, K, is defined, which is the exact length of the plan. It can be measured as the number of the actions. is obtained after is applied.

Introduce cost functional:

denote a stage-additive cost (or loss) functional, which is applied to a K-step plan, . This means that the sequence of actions and the sequence of states may appear in an expression of L.

For convenience, let F denote the final stage, (the application of advances the stage to )

The cost term yields a real value for every .

The final term is outside of the sum and is defined as if , and otherwise.

Distinguish

is the cost term after applying at while is the state transition fuction to obtain

2.3.1 Optimal Fixed-Length Plans

This section will mainly discuss the value iteration algorithm, which is to iteratively compute optimal cost-to-go (or cost-to-come) functions over the state space. In some conditions, it can be reduced to Dijkstra algorithm. There are mainly two versions of this algorithm, namely backward value iteration and forward value iteration.

2.3.1.1 Backward value iteration

Firstly, we will introduce a new cost fuctional called , which represents the cost-to-go fuction accumulated through stage k to F. It can be written as the following equation:

This can be converted to the following equation (the proof process is omitted here as the formula will be well understood from its definition):

This produces the recurrence, which can be used to obtain iteratively from . It's like:

may contain the .

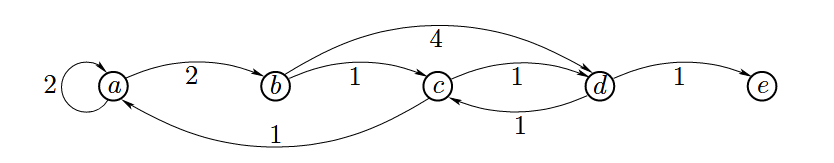

Example 1

Suppose that . Hence, there will be four iterations by constructing .

Firstly, , For state a, b, c, e, they are not in , so each value of them is . For state d, the value is 0.

, can be a, b, c, d, e. Let's assume a as the current state() for instance. , the equation goes to . Here, can be a, b, c, d, e. is the edge from a to . We need to find out the smallest of the five combinations of and . Obviously, all of them is .

Let's take b, c as the , respectively. You can see that . .

, the potential options of . You need to take a,b,c,d,e as to calculate their optimal value . For example, d as . There are five circumstances, in which 3 of them are (a, d, e). So the left two are . So .

In this way can you easily obtain . The results are shown in the following table.

| a | b | c | d | e | |

|---|---|---|---|---|---|

| G₅* | ∞ | ∞ | ∞ | 0 | ∞ |

| G₄* | ∞ | 4 | 1 | ∞ | ∞ |

| G₃* | 6 | 2 | ∞ | 2 | ∞ |

| G₂* | 4 | 6 | 3 | ∞ | ∞ |

| G₁* | 6 | 4 | 5 | 4 | ∞ |

2.3.1.2 Forward value iteration

The ideas from Section 2.3.1.1 may be recycled to yield a symmetrically equivalent method that computes optimal cost-to-come functions from the initial stage.

In the backward case, must be fixed, and in the forward case, must be fixed.

Symmetrically, here we introduce , which denotes the optimal cost-to-come value from stage 1 to k. serves as the same role of . That is

in which is a new function that yields , and for all . Thus, any plans that try to start from a state other than will immediately receive infinite cost.

Likewise, we can get the same equation:

Also the recurrence:

Example 2

We can still use the net in Figure 1, perform the forward iteration:

Suppose K=4, we need to calculate for a, b, c, d, e. Each has 5 options of . For instance, , there exsits a-c, b-c, c-c, d-c, e-c. , . Thus we only need to compare and . The former is smaller, which equals to 5 while the latter is 7.

| a | b | c | d | e | |

|---|---|---|---|---|---|

| C₁* | 0 | ∞ | ∞ | ∞ | ∞ |

| C₂* | 2 | 2 | ∞ | ∞ | ∞ |

| C₃* | 4 | 4 | 3 | 6 | ∞ |

| C₄* | 4 | 6 | 5 | 4 | 7 |

| C₅* | 6 | 6 | 5 | 6 | 5 |

2.3.2 Optimal Plans of Unspecified Lengths

In section 2.3.1 we learn algorithm solving optimal fixed-length plans. However, it is obviously unreasonable. To begin with, we don't know the exact length of the solution. We need to set it in advance, which can be inappropriate. In example 1, we can obtain the optimal path from . But we repeat another redundant iteration in .

So how to address this issue? That is variable-length plan.

The value-iteration method, originally used for fixed-length plans, is generalized for plans of different lengths. There is no upper limit to how long a plan can be, making this approach a true generalization of earlier fixed-length formulations.

How to accomplish this? Here we introduce a special "termination" action, denoted as . This action allows a plan to stop at any state, effectively saying, "We are done." Once this action is applied, the state no longer changes, and no additional costs are incurred. This enables plans of different lengths to be handled uniformly. For example, a two-step plan that reaches the goal can be extended to a longer plan by simply repeating the termination action without changing the cost like .

The termination action is applied when the system has reached a goal state, meaning the current state .

Once the goal is achieved, further actions are unnecessary, and the cost will not increase. So, the system applies the termination action to "stop" further planning or changes in the state.

For iteration going on, we introduce two similar formulas.

For backward value iteration:

This formula calculates the optimal action at a given state :

- : Represents the cost incurred by taking action in state .

- : The optimal cost-to-go function, which estimates the remaining cost to the goal from the next state , the state that results from applying action $u $ to state .

The formula minimizes the total cost, which is the sum of the immediate cost and the cost-to-go from the resulting state. The argmin part means we are selecting the action that yields the lowest total cost.

For forward value iteration:

- : Refers to the state from which action would bring the system into state .

- : The optimal cost-to-come function, analogous to , but in a forward direction. It tells us the best cost incurred to reach from some previous state.

- : Represents the cost of the action leading from the predecessor state to .

In this way can we not rely on the specified . Since we select the action that yields the lowest total cost every iteration.

Distinguish

Key Differences between (2) (4) in fixed-length planning and (5) (6) in variable-length planning

Fixed vs. Variable Length:

- (2)(4) is used in the context of fixed-length planning, where the number of stages is known and the goal is to minimize the cost over a set number of steps.

- (5)(6) is for variable-length planning, where the number of stages is unspecified, and you want to minimize the overall cost-to-go/cost-to-come, with no constraint on the number of steps.

Stage Dependency:

- (2)(4) depends on the stage index (since the cost-to-go depends on the specific stage).

- (5)(6) is independent of any stage index because it is used for unspecified-length plans, where the focus is on minimizing the total cost regardless of how long the plan takes.

Similarity

Both formulas aim to minimize the total cost by selecting the optimal action at each state based on a cost function that combines immediate cost and the future cost-to-go/past cost-to-come. The mechanism for selecting actions is the same—iteratively finding the action that leads to the least total cost.

Example 1 will be changed into:

| a | b | c | d | e | |

|---|---|---|---|---|---|

| G₀* | ∞ | ∞ | ∞ | 0 | ∞ |

| G₋₁* | ∞ | 4 | 1 | 0 | ∞ |

| G₋₂* | 6 | 2 | 1 | 0 | ∞ |

| G₋₃* | 4 | 2 | 1 | 0 | ∞ |

| G₋₄* | 4 | 2 | 1 | 0 | ∞ |

| G* | 4 | 2 | 1 | 0 | ∞ |

2.3.3 Dijkstra Revisited

The key differences between Dijkstra algorithm and forward value iteration algorithm are shown as below:

| Feature | Dijkstra's Algorithm | Forward Value Iteration |

|---|---|---|

| Cost Metric | Minimizes cost-to-come (from start to current state) | Propagates cost-to-come in forward iteration |

| Approach | Greedy, explores states with minimum cost-to-come | Dynamic programming, iterates over all states simultaneously |

| Exploration Strategy | Expands one state at a time based on smallest cost-to-come | Updates all states simultaneously in each iteration |

| Priority | Uses a priority queue to expand least-cost states first | Does not prioritize; updates globally |

| Set of Alive States | Yes, maintains a set of "alive" states (states yet to be finalized) | No, updates all states without maintaining alive states |

| Best Use Case | Finding the shortest path to a goal state | Computing global cost-to-come for all states |

| Efficiency | More efficient for single-goal problems | Less efficient; explores the entire state space |

If Dijkstra’s algorithm seems so clever, then why have we spent time covering the value-iteration method? For some problems it may become too expensive to maintain the sorted queue, and value iteration could provide a more efficient alternative. A more important reason is that value iteration extends easily to a much broader class of problems. Examples include optimal planning over continuous state spaces (Sections 8.5.2 and 14.5), stochastic optimal planning (Section 10.2), and computing dynamic game equilibria (Section 10.5).

Dijkstra’s algorithm belongs to a broader family of label-correcting algorithms, which all produce optimal plans by making small modifications to the general forward-search algorithm.

FORWARD LABEL CORRECTING()

1 Set C(x) = ∞ for all x(except xI), and set C(xI) = 0.

2 Q.Insert(xI)

3 while Q not empty do:

4 x ← GetFirst(Q)

5 for all u in U(x)

6 x' ← f(x, u)

7 if C(x) + l(x, u) < min{C(x'), C(xG)}

8 C(x') ← C(x) + l(x, u)

9 if x' is not xG

10 Q.Insert(x')

Notably, the label-correcting approach uses the cost at the goal state to prune away many candidate paths; this is shown in line 7. Thus, it is only formulated to work for a single goal state; it can be adapted to work for multiple goal states, but performance degrades. The motivation for including in line 7 is that there is no need to worry about improving costs at some state, , if its new cost-to-come would be higher than ; there is no way it could be along a path that improves the cost to go to .

2.4 Using Logic to Formulate Discrete Planning

For many discrete planning problems that we would like a computer to solve, the state space is enormous (e.g., states). Therefore, substantial effort has been invested in constructing implicit encodings of problems in hopes that the entire state space does not have to be explored by the algorithm to solve the problem.

Pros and Cons of logic-based representations:

Such representations were the basis of the majority of artificial intelligence research during the 1950s–1980s.

It is useful for representing certain kinds of planning problems very compactly.

Many discrete planning algorithms are implemented in large software systems. At some point, when these systems solve a problem, they must provide the complete plan to a user, who may not care about the internals of planning. Logic-based representations have seemed convenient for producing output that logically explains the steps involved to arrive at some goal.

Logic-based representations are difficult to generalize.

2.4.1 A STRIPS-Like Representation

STRIPS-like representations have been the most common logic-based representations for discrete planning problems. This refers to the STRIPS system, which is considered one of the first planning algorithms and representations; its name is derived from the STanford Research Institute Problem Solver. There are many variations of STRIPS-like representations. Here is one formulation:

A finite, nonempty set of instances. Instances are just the object existing in the world like books or trees.

A finite, nonempty set of predicates, which are binary-valued (partial) functions of one of more instances. Each application of a predicate to a specific set of instances is called a positive literal. A logically negated positive literal is called a negative literal.

The predicates can form the basic properties or statements of certain instances. For example, a predicate called Under might be used to indicate things like Under(Book, Table) (the book is under the table) or Under(Dirt, Rug). (Here book and table are arguments)

A predicate can be interpreted as a kind of function that yields true or false values;

however, it is important to note that it is only a partial function which needs argument(s) to be inserted. And here instances are the argument(s). It might not be desirable to allow any instance to be inserted as an argument to the predicate.(In other words, some combinations of predicates and instance are obviously true or false.)

Summary: predicates(argument 1, argument 2, argument 3..., argument n) = positive/negative literal (instances are arguments)

A finite, nonempty set of operators, each of which has: 1) preconditions, which are positive or negative literals that must hold for the operator to apply, and 2) effects, which are positive or negative literals that are the result of applying the operator.

An initial set which is expressed as a set of positive literals. Negative literals are implied. For any positive literal that does not appear in , its corresponding negative literal is assumed to hold initially.

A goal set which is expressed as a set of both positive and negative literals.

Summary: preconditions, effects, initial sets and goal sets are all made up of literals.

The process involves:

Initially, we have several instances and define several predicates so as to form literals. We set the initial sets and goal sets. Based on corresponding preconditions, we need to find the operators that produces the effects we want (reach the destination).

Example 3

Imagine a planning problem that involves putting two batteries into a flashlight, as shown in the following figure. The set of instances are

Two different predicates will be defined, On and In, each of which is a partial function on I. The predicate On may only be applied to evaluate whether the Cap is On the Flashlight and is written as On(Cap, Flashlight). The predicate In may be applied in the following two ways: In(Battery1, Flashlight), In(Battery2, F lashlight), to indicate whether either battery is in the flashlight.

Recall that predicates are only partial functions in general. For the predicate In, it is not desirable to apply any instance to any argument. For example, it is meaningless to define In(Battery1, Battery1) and In(F lashlight, Battery2) (they could be included in the model, always retaining a negative value, but it is inefficient).

The initial set is

Based on S, both ¬In(Battery1, F lashlight) and ¬In(Battery2, Flashlight) are assumed to hold. Thus, S indicates that the cap is on the flashlight, but the batteries are outside. The goal state is

which means that both batteries must be in the flashlight, and the cap must be on. The set O consists of the four operators, which are

| Name | Preconditions | Effects |

|---|---|---|

| PlaceCap | ||

| RemoveCap | ||

| Insert(i) |

Here is a plan that reaches the goal state in the smallest number of steps:

In words, the plan simply says to take the cap off, put the batteries in, and place the cap back on.

This example appears quite simple, and one would expect a planning algorithm to easily find such a solution. It can be made more challenging by adding many more instances to I, such as more batteries, more flashlights, and a bunch of objects that are irrelevant to achieving the goal. Also, many other predicates and operators can be added so that the different combinations of operators become overwhelming.

2.4.2 Converting to the State-Space Representation

By converting the logic-based representation to the state-space representation, we can easily use the algorithm introduced previously to solve the problem.

Up to now, the notion of “state” has been only vaguely mentioned in the context of the STRIPS-like representation. Now consider making this more concrete. Suppose that every predicate has arguments, and any instance could appear in each argument. This means that there are complementary pairs, which corresponds to all of the ways to substitute instances into all arguments of all predicates.(obviously there are p predicates, all of which has k places that each has I options(instances))

To express the state, a positive or negative literal must be selected from every complementary pair. For convenience, this selection can be encoded as a binary string by imposing a linear ordering on the instances and predicates.

Using Example 3, the state might be specified in order as

Using a binary string, each element can be “0” to denote a negative literal or “1” to denote positive literal. The encoded state is x = 101 for the formula above.

If any instance can appear in the argument of any predicate, then the length of the string is . The total number of possible states of the world that could possibly be distinguished corresponds to the set of all possible bit strings. This set has size

(Each place has 0 or 1 two options)

We can see that a small number of P and I can generate enormous state spaces, leading inefficient performance of algorithm.

The next step in converting to a state-space representation is to encode the initial state as a string. The goal set, , is the set of all strings that are consistent with the positive and negative goal literals. This can be compressed by extending the string alphabet to include a “don’t care” symbol, .(Namely 1 or 0 in this place are both acceptable.) A single string that has a “0” for each negative literal, a “1” for each positive literal, and a “δ” for all others would suffice in representing any XG that is expressed with positive and negative literals.

Now convert the operators. To apply the search techniques of Section 2.2, note that it is not necessary to determine explicitly in advance for all . Instead, can be computed whenever each is encountered for the first time in the search.

The effects of the operator are encoded by the state transition equation. From a given , the next state, , is obtained by flipping part of the bits(0 → 1 or inversely) when operators applied.

2.5 Logic-Based Planning Methods

This section discusses how to adapt the value-iteration method to work under the logic-based representation, yielding optimal plans.

2.5.1 Searching in a Space of Partial Plans

One alternative to searching directly in X is to construct partial plans without reference to particular states. By using the operator representation, partial plans can be incrementally constructed. The idea is to iteratively achieve required subgoals in a partial plan while ensuring that no conflicts arise that could destroy the solution developed so far.

A partial plan is defined as

A set of operators that need to be applied. If the operators contain variables, these may be filled in by specific values or left as variables. The same operator may appear multiple times in , possibly with different values for the variables.

A partial ordering relation on , which indicates for some pairs that one must appear before other: .

A set of binding constraints, in which each indicates that some variables across operators must take on the same value.

A set of causal links, in which each is of the form and indicates that achieves the literal for the purpose of satisfying a precondition of (In other words, achieved which is the precondition of ).

Example 4 (A Partial Plan)

Each partial plan encodes a set of possible plans. Recall the model from Example 3. Suppose

A sensible ordering constraint is that

A causal link

indicates that the RemoveCap operator achieves the literal , which is a precondition of .

There are no binding constraints for this example. The partial plan implicitly represents the set of all plans for which RemoveCap appears before , under the constraint that the causal link is not violated.

A vertex in the partial-plan search graph is a partial plan, and an edge is constructed by extending one partial plan to obtain another partial plan that is closer to completion. Although the general template is simple, the algorithm performance depends critically on the choice of initial plan and the particular flaw that is resolved in each iteration. One straightforward generalization is to develop multiple partial plans and decide which one to refine in each iteration.

In early works, methods based on partial plans seemed to offer substantial benefits; however, they are currently considered to be not “competitive enough” in comparison to methods that search the state space. One problem is that it becomes more difficult to develop application-specific heuristics without explicit references to states. Also, the vertices in the partial-plan search graph are costly to maintain and manipulate in comparison to ordinary states.